Software Development

Why “Baklava”?

July 1, 2025

For many years, Android versions carried dessert names (Cupcake, Donut, Oreo…), and those codenames followed the alphabet. Android 16 breaks the sequence: after Vanilla Ice Cream (Android 15) comes not a "W" dessert but "Baklava". The name is both a nostalgic nod and a salute to our local culture, spotlighting the world-famous Turkish pastry. 🙂

Predictive Back Updates

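When the user swipes back, the screen no longer disappears abruptly. Android previews the destination with a system animation (for example back-to-home or cross-activity), which makes navigation feel more natural. You can still play your own animation by registering a callback at PRIORITY_DEFAULT, which takes over back handling. Android 16 also adds OnBackInvokedDispatcher.PRIORITY_SYSTEM_NAVIGATION_OBSERVER for callbacks that only need to receive the regular onBackInvoked() call whenever the system handles a back navigation, without intercepting or changing that flow.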

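```kotlin
// PRIORITY_DEFAULT (API 33+) intercepts Back, so this callback now owns the navigation
val fadeBack = OnBackInvokedCallback {
    // Fade the content out over 150 ms, pop the fragment back stack, then restore opacity
    binding.root.animate().alpha(0f).setDuration(150).withEndAction {
        supportFragmentManager.popBackStack()
        binding.root.alpha = 1f
    }
}
onBackInvokedDispatcher.registerOnBackInvokedCallback(
    OnBackInvokedDispatcher.PRIORITY_DEFAULT, fadeBack
)
// Unregister fadeBack once nothing is left to pop, so the system handles Back again
```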

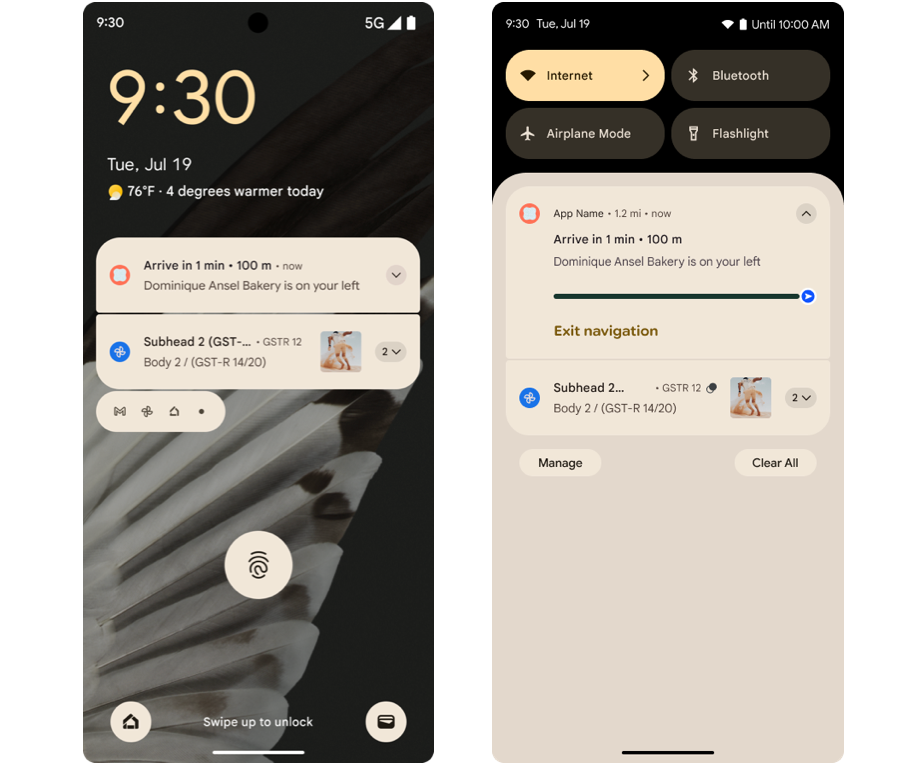

Progress-centric notifications

Notification.ProgressStyle lets a notification show a whole journey from start to end. For long tasks such as downloads, delivery tracking, or turn-by-turn navigation, users can see which stage they are in: segments mark the phases, and points highlight key milestones along the way, which makes the process more predictable.

```kotlin
// Segment lengths together define the bar's length (41 + 552 + 253 + 94 = 940)
val ps =
    Notification.ProgressStyle()
        .setStyledByProgress(false)
        .setProgress(456)
        .setProgressTrackerIcon(Icon.createWithResource(appContext, R.drawable.ic_car_red))
        .setProgressSegments(
            listOf(
                Notification.ProgressStyle.Segment(41).setColor(Color.BLACK),
                Notification.ProgressStyle.Segment(552).setColor(Color.YELLOW),
                Notification.ProgressStyle.Segment(253).setColor(Color.WHITE),
                Notification.ProgressStyle.Segment(94).setColor(Color.BLUE)
            )
        )
        .setProgressPoints(
            listOf(
                Notification.ProgressStyle.Point(60).setColor(Color.RED),
                Notification.ProgressStyle.Point(560).setColor(Color.GREEN)
            )
        )
// Attach it with Notification.Builder(context, channelId).setStyle(ps) (API 36+)
```
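With ProgressStyle, the segment lengths together set the length of the bar, and setProgress() places the tracker along it. The helper below is a plain-Kotlin sketch (a hypothetical function, not part of the Android API) that maps a progress value to the segment it falls in, for example to pick which phase name to show in the notification text. It assumes, as we understand the API, that the bar's maximum is the sum of the segment lengths.

```kotlin
/**
 * Hypothetical helper (not an Android API): returns the index of the segment that
 * contains [progress], given segment lengths in the order passed to
 * setProgressSegments(). Assumes the bar's maximum is the sum of the lengths.
 */
fun activeSegmentIndex(segmentLengths: List<Int>, progress: Int): Int {
    require(segmentLengths.isNotEmpty()) { "at least one segment is required" }
    require(segmentLengths.all { it > 0 }) { "segment lengths must be positive" }
    val max = segmentLengths.sum()
    require(progress in 0..max) { "progress must be within 0..$max" }

    var segmentEnd = 0
    for ((index, length) in segmentLengths.withIndex()) {
        segmentEnd += length
        // Each segment covers [start, end); a value on a boundary belongs to the next one
        if (progress < segmentEnd) return index
    }
    // progress == max: the journey is complete, so report the last segment
    return segmentLengths.lastIndex
}
```

With the values from the example, activeSegmentIndex(listOf(41, 552, 253, 94), 456) returns 1, the yellow segment, because 456 lies between 41 and 593.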

Richer tactile experiences

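Android 16 lets you control the amplitude and frequency curves of a haptic effect (through VibrationEffect.BasicEnvelopeBuilder and WaveformEnvelopeBuilder), while the framework smooths over differences between device actuators. You can build complex patterns such as "short-long-short" to match different actions, and deliver consistent feedback across devices, from explosions in games to subtle confirmation taps on forms. The snippet below uses the long-standing amplitude waveform API to express such a pattern.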

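```kotlin
// Each timing (ms) is paired with the amplitude at the same index; 0 = motor off
val pattern = longArrayOf(0, 60, 40, 120, 40, 60)     // short - long - short
val amplitudes = intArrayOf(0, 180, 0, 255, 0, 180)   // 1..255 = strength
val effect = VibrationEffect.createWaveform(pattern, amplitudes, -1) // -1 = no repeat

// Without amplitude control (hasAmplitudeControl() == false), non-zero values just mean "on"
val vibrator = getSystemService(VibratorManager::class.java).defaultVibrator // API 31+
vibrator.vibrate(effect)
```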
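For patterns like "short-long-short", createWaveform() takes two parallel arrays: each timing in milliseconds is paired with the amplitude at the same index, and amplitude 0 means the motor is off. The sketch below is a hypothetical helper (not an Android API) that builds those arrays from a list of pulse names; the 60/120/40 ms durations are illustrative defaults, not recommended values.

```kotlin
/** Parallel arrays for VibrationEffect.createWaveform(timings, amplitudes, repeat). */
class Waveform(val timings: LongArray, val amplitudes: IntArray)

/**
 * Hypothetical helper: turns pulses such as listOf("short", "long", "short") into
 * timings/amplitudes, inserting a silent gap before every pulse but the first.
 */
fun buildWaveform(
    pulses: List<String>,
    shortMs: Long = 60,
    longMs: Long = 120,
    gapMs: Long = 40,
    amplitude: Int = 255,
): Waveform {
    require(amplitude in 1..255) { "amplitude must be in 1..255" }
    val timings = mutableListOf<Long>()
    val amplitudes = mutableListOf<Int>()
    pulses.forEachIndexed { i, pulse ->
        // Silent segment first (0 ms before the first pulse, so it starts immediately)
        timings += if (i == 0) 0L else gapMs
        amplitudes += 0
        // Then the pulse itself at the requested strength
        timings += when (pulse) {
            "short" -> shortMs
            "long" -> longMs
            else -> throw IllegalArgumentException("Unknown pulse: $pulse")
        }
        amplitudes += amplitude
    }
    return Waveform(timings.toLongArray(), amplitudes.toIntArray())
}
```

On a device you would then call vibrator.vibrate(VibrationEffect.createWaveform(w.timings, w.amplitudes, -1)), where -1 means the pattern plays once.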

Content handling for live wallpapers

With WallpaperDescription and WallpaperInstance, you can define separate metadata for each live wallpaper variant, like lock screen or home screen. This makes it easier to assign different content to each one from the same wallpaper service. The picker UI also gets this metadata so users can select personalized versions more easily.

```kotlin
// Metadata for one variant of the wallpaper ("sunrise")
val desc = WallpaperDescription.Builder()
    .setId("sunrise")
    .setTitle("Sunrise")
    .setDescription(listOf("A warm sunrise scene"))
    .setThumbnail(sunriseThumbUri)
    .setContextDescription("Settings")
    .setContextUri(Uri.parse("app://settings/sunrise"))
    .build()

val wm = WallpaperManager.getInstance(ctx)
// getWallpaperInfo() returns null when the current wallpaper is a static image
wm.wallpaperInfo?.let { info ->
    val instance = WallpaperInstance(info, desc)
    wm.setWallpaperInstance(instance, WallpaperManager.FLAG_SYSTEM) // FLAG_SYSTEM = home screen
}
```

System-triggered profiling

ProfilingManager arrived in Android 15, and Android 16 adds system-triggered profiling. Your app registers interest in triggers such as a cold start (up to reportFullyDrawn()) or an ANR, and the system starts and stops the trace on your behalf. Because the system decides when to record, it is much easier to capture these hard-to-reproduce flows in the field. Finished traces are delivered to your app's data directory for analysis.

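```kotlin
val pm = getSystemService(ProfilingManager::class.java)
// Receive finished traces; the files land in the app's data directory
pm.registerForAllProfilingResults(mainExecutor) { result ->
    Log.d("Profiling", "Trace ${result.resultFilePath}, error=${result.errorCode}")
}
// Ask the system to trace cold starts (until reportFullyDrawn()) and ANRs (API 36+)
pm.addProfilingTriggers(listOf(
    ProfilingTrigger.Builder(ProfilingTrigger.TRIGGER_TYPE_APP_FULLY_DRAWN).build(),
    ProfilingTrigger.Builder(ProfilingTrigger.TRIGGER_TYPE_ANR).build()
))
```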

Start component in ApplicationStartInfo

ApplicationStartInfo arrived in Android 15, and Android 16 adds getStartComponent(), which tells you which kind of component triggered the process start: an activity, a service, a broadcast receiver, a content provider, or something else. You can use it to adapt the first screen or the startup work you do, and it makes startup diagnostics much clearer.

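```kotlin
val am = getSystemService(ActivityManager::class.java)
// Most recent process start for this app (API 35+). During startup the record may
// still be in progress; addApplicationStartInfoCompletionListener() delivers it when complete.
val startInfo = am.getHistoricalProcessStartReasons(1).firstOrNull()

// getStartComponent() is API 36+
when (startInfo?.startComponent) {
    ApplicationStartInfo.START_COMPONENT_ACTIVITY -> navigateToHomeScreen()
    ApplicationStartInfo.START_COMPONENT_BROADCAST -> navigateToReceiverHandledScreen()
    ApplicationStartInfo.START_COMPONENT_SERVICE -> navigateToServiceResultScreen()
    // START_COMPONENT_CONTENT_PROVIDER, START_COMPONENT_OTHER, or no record yet
    else -> navigateToDefaultScreen()
}
```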

Better job introspection

New in Android 16, JobScheduler.getPendingJobReasons(jobId) returns every reason a scheduled job is still pending, whether it is held back by system restrictions or by constraints you defined. getPendingJobReasonsHistory(jobId) returns the recent history of those reasons. Together they help you debug delayed JobScheduler tasks.

```kotlin
// 1. Get the JobScheduler instance
val jobScheduler = context.getSystemService(JobScheduler::class.java)

// 2. Get every reason the job is still pending (API 36+)
val reasons: IntArray = jobScheduler.getPendingJobReasons(MY_JOB_ID)

// 3. Check and log each reason
if (reasons.isEmpty()) {
    Log.d("JobDebug", "No pending reasons reported")
} else {
    reasons.forEach { reason ->
        when (reason) {
            JobScheduler.PENDING_JOB_REASON_CONSTRAINT_BATTERY_NOT_LOW ->
                Log.w("JobDebug", "Waiting for the battery to not be low")
            JobScheduler.PENDING_JOB_REASON_CONSTRAINT_DEVICE_IDLE ->
                Log.w("JobDebug", "Waiting for the device to be idle")
            JobScheduler.PENDING_JOB_REASON_CONSTRAINT_CHARGING ->
                Log.w("JobDebug", "Requires charging")
            JobScheduler.PENDING_JOB_REASON_CONSTRAINT_CONNECTIVITY ->
                Log.w("JobDebug", "Waiting for the required network (e.g. unmetered Wi-Fi)")
            // ... other PENDING_JOB_REASON_* constants
            else ->
                Log.w("JobDebug", "Pending reason code: $reason")
        }
    }
}

// 4. Review the recent history of pending reasons
val history: List<PendingJobReasonsInfo> =
    jobScheduler.getPendingJobReasonsHistory(MY_JOB_ID)

history.forEach { entry ->
    Log.d("JobHistory", "${entry.timestampMillis}: reasons=${entry.pendingJobReasons.joinToString()}")
}
```
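The history is most useful once it is turned into durations. The sketch below uses a hypothetical ReasonsSnapshot class as a stand-in for each history entry (a timestamp plus the reason codes active at that moment) and assumes each entry stays in effect until the next one. It totals how long each reason code held the job back.

```kotlin
/** Hypothetical stand-in for one history entry: a timestamp and the active reason codes. */
data class ReasonsSnapshot(val timestampMillis: Long, val reasons: Set<Int>)

/**
 * Sums how long each reason code was active. Each snapshot is assumed to hold until
 * the next one; the newest snapshot holds until [nowMillis].
 */
fun timePerReason(history: List<ReasonsSnapshot>, nowMillis: Long): Map<Int, Long> {
    val totals = mutableMapOf<Int, Long>()
    val sorted = history.sortedBy { it.timestampMillis }
    sorted.forEachIndexed { i, snapshot ->
        val end = if (i + 1 < sorted.size) sorted[i + 1].timestampMillis else nowMillis
        val span = (end - snapshot.timestampMillis).coerceAtLeast(0L)
        for (reason in snapshot.reasons) {
            totals[reason] = (totals[reason] ?: 0L) + span
        }
    }
    return totals
}
```

Sorting the result by value points you at the constraint that delayed the job the longest; the keys are the same PENDING_JOB_REASON_* codes the API reports.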

Adaptive refresh rate

Adaptive refresh rate (ARR), introduced in Android 15, lets the display refresh rate follow the content frame rate on supported hardware. Android 16 adds Display.hasArrSupport() and getSuggestedFrameRate(), and brings back getSupportedRefreshRates(), so you can list every available rate (for example 60 Hz, 90 Hz, or 120 Hz) and choose the best one for your content.

```kotlin
// 1. Get the Display the view is attached to (null if it is not attached yet)
val display = myCustomView.display

// 2. Verify that adaptive refresh rate is supported (API 36+)
if (display != null && display.hasArrSupport()) {
    // 3. List all supported refresh rates (e.g. [60.0, 90.0, 120.0])
    val rates: FloatArray = display.supportedRefreshRates
    Log.d("ARR", "Supported rates: ${rates.joinToString()}")

    // 4. Ask the display for its suggested rate for the "normal" frame rate category
    val suggestedFps: Float = display.getSuggestedFrameRate(Display.FRAME_RATE_CATEGORY_NORMAL)

    // 5. Vote for that rate on the view (View.setRequestedFrameRate is API 35+)
    myCustomView.setRequestedFrameRate(suggestedFps)
}
```
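Once you have the supported rates, one reasonable policy is to pick the lowest rate that is a whole multiple of your content's frame rate, so every frame stays on screen for the same number of refreshes and playback does not judder. The function below is a hypothetical plain-Kotlin sketch of that policy, not an Android API; on a device you would pass it the array from getSupportedRefreshRates().

```kotlin
import kotlin.math.abs
import kotlin.math.roundToInt

/**
 * Hypothetical helper: returns the lowest supported refresh rate that is a whole
 * multiple of [contentFps] (within [tolerance]); falls back to the highest rate.
 */
fun pickRefreshRate(supported: FloatArray, contentFps: Float, tolerance: Float = 0.01f): Float {
    require(supported.isNotEmpty()) { "no supported refresh rates" }
    require(contentFps > 0f) { "contentFps must be positive" }
    val sorted = supported.sorted()
    return sorted.firstOrNull { rate ->
        val ratio = rate / contentFps
        // At least one refresh per frame, and an (almost) whole number of refreshes
        ratio >= 1f - tolerance && abs(ratio - ratio.roundToInt()) <= tolerance
    } ?: sorted.last()
}
```

For 24 fps video on a display offering 60, 90, and 120 Hz, this picks 120 Hz (five refreshes per frame), whereas 60 Hz would force an uneven 3:2 cadence.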

Headroom APIs in ADPF

In Android 16, SystemHealthManager.getCpuHeadroom() and getGpuHeadroom() report how much CPU and GPU capacity is still available to your app. CpuHeadroomParams and GpuHeadroomParams let you tune how that value is computed, such as the time window and the statistic type, so you can scale quality down dynamically before thermal throttling degrades the experience.

```kotlin
val shm = getSystemService(SystemHealthManager::class.java)

// Average CPU headroom over a 500 ms window
val params = CpuHeadroomParams.Builder()
    .setDurationMillis(500)
    .setStatType(CpuHeadroomParams.STAT_TYPE_AVERAGE)
    .build()

val cpuHeadroom = shm.getCpuHeadroom(params)
Log.d("Performance", "CPU headroom: $cpuHeadroom%")
```
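Reading headroom only pays off if you act on it. Assuming the value is a percentage where higher means more spare capacity, as the log line above suggests, a game or renderer might map it to a quality tier. The thresholds (50 and 20) and the tier names below are hypothetical tuning choices, not values from ADPF.

```kotlin
/** Hypothetical rendering tiers an app might switch between. */
enum class Quality { LOW, MEDIUM, HIGH }

/**
 * Maps a headroom reading (assumed 0..100, higher = more spare capacity) to a tier.
 * An unavailable reading (NaN) keeps the middle tier rather than guessing high.
 */
fun qualityFor(headroom: Float): Quality = when {
    headroom.isNaN() -> Quality.MEDIUM
    headroom >= 50f -> Quality.HIGH
    headroom >= 20f -> Quality.MEDIUM
    else -> Quality.LOW
}
```

In practice you would also add some hysteresis, so the tier does not flip back and forth on small fluctuations.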

Improved accessibility APIs

Outline Text for Maximum Contrast

In Android 16, high contrast text is replaced by outline text: instead of only switching the text color to black or white, the system draws a larger contrasting area around the text, so it stands out against any background. Standard text gets this without any extra code from the developer.

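```kotlin
val am = context.getSystemService(AccessibilityManager::class.java)

// Optional: add your own contrast halo to text that needs extra emphasis,
// for example text drawn over images
fun applyContrastHalo(enabled: Boolean) {
    myTextView.setShadowLayer(if (enabled) 4f else 0f, 0f, 0f, Color.BLACK)
}

// Check whether high contrast (outline) text is enabled (API 36+)
applyContrastHalo(am.isHighContrastTextEnabled)

// React when the user toggles the setting
am.addHighContrastTextStateChangeListener(context.mainExecutor) { enabled ->
    applyContrastHalo(enabled)
}
```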

Duration in TtsSpan (TYPE_DURATION)

With TtsSpan.TYPE_DURATION, you can mark text such as "1 hour 30 minutes" as a duration, so the text-to-speech engine reads it as a length of time instead of a loose run of numbers and words. This makes spoken output clearer in apps like fitness or education, where durations come up constantly.

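```kotlin
// Describe a 5-second duration to the TTS engine (TYPE_DURATION / ARG_SECONDS are API 36+)
val durationSpan = TtsSpan.Builder<TtsSpan.Builder<*>>(TtsSpan.TYPE_DURATION)
    .setIntArgument(TtsSpan.ARG_SECONDS, 5)
    .build()

val label = "Time: 5 seconds"
val spoken = "5 seconds"
val start = label.indexOf(spoken) // compute offsets instead of hard-coding them
val text = SpannableString(label)
text.setSpan(durationSpan, start, start + spoken.length, Spanned.SPAN_EXCLUSIVE_EXCLUSIVE)
myTextView.text = text
```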
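Two details trip people up here: the duration has to be split into the hour, minute, and second values a TYPE_DURATION span carries (ARG_HOURS, ARG_MINUTES, and ARG_SECONDS, as we understand the Android 16 API), and the span offsets must fall inside the string. The plain-Kotlin helpers below are hypothetical (not Android APIs) and cover both.

```kotlin
/** Hour/minute/second parts of a duration, as carried by a TYPE_DURATION TtsSpan. */
data class DurationParts(val hours: Int, val minutes: Int, val seconds: Int)

/** Hypothetical helper: splits a non-negative number of seconds into parts. */
fun splitDuration(totalSeconds: Int): DurationParts {
    require(totalSeconds >= 0) { "duration must be non-negative" }
    return DurationParts(
        hours = totalSeconds / 3600,
        minutes = (totalSeconds % 3600) / 60,
        seconds = totalSeconds % 60,
    )
}

/** Hypothetical helper: offsets of [spoken] inside [text], or null if it is absent. */
fun spanRange(text: String, spoken: String): IntRange? {
    val start = text.indexOf(spoken)
    return if (start < 0) null else start until start + spoken.length
}
```

For "Time: 1 hour 30 minutes", splitDuration(5400) gives 1 hour, 30 minutes, and 0 seconds, and spanRange() gives the start (range.first) and exclusive end (range.last + 1) to pass to setSpan().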

setExpandedState

You can now use AccessibilityNodeInfo.setExpandedState(), together with AccessibilityEvent.CONTENT_CHANGE_TYPE_EXPANDED, to tell screen readers whether an element such as a dropdown or an accordion is expanded or collapsed. This gives visually impaired users better context.

addLabeledBy / getLabeledByList

An element can now be labeled by more than one view, for example a text label and an icon. Screen readers can then combine all of these labels to describe the element more clearly.

RANGE_TYPE_INDETERMINATE

When you don’t know how long a task will take (like loading data), you can use RANGE_TYPE_INDETERMINATE to tell accessibility services that progress is happening, even if no percentage is available.

Tri-state CheckBox

AccessibilityNodeInfo can now report three checked states: checked, unchecked, and partially checked. The partial state is useful when only some sub-items are selected, for example a "sync all folders" checkbox when just a few folders are synced.
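Deciding which of the three states to report is usually a small aggregation over the sub-items. The sketch below is plain Kotlin with a hypothetical TriState enum; on Android 16 you would translate the result to AccessibilityNodeInfo's CHECKED_STATE_TRUE, CHECKED_STATE_FALSE, or CHECKED_STATE_PARTIAL (as we understand the constant names) when populating the node.

```kotlin
/** Hypothetical mirror of the three checked states. */
enum class TriState { CHECKED, UNCHECKED, PARTIAL }

/**
 * Parent state for a group of sub-items (true = selected): all selected -> CHECKED,
 * none selected (or no items) -> UNCHECKED, anything in between -> PARTIAL.
 */
fun parentState(children: List<Boolean>): TriState = when {
    children.none { it } -> TriState.UNCHECKED
    children.all { it } -> TriState.CHECKED
    else -> TriState.PARTIAL
}
```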

setSupplementalDescription

You can add extra info to a view using setSupplementalDescription() in addition to the regular contentDescription. This helps screen readers give users more context, like “75% done, updated 2 minutes ago”.

setFieldRequired

You can now mark form fields as required using setFieldRequired(true). Screen readers will inform users that the field is mandatory, reducing form errors.

Phone as microphone input for voice calls with LEA hearing aids

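In Android 16, users of LE Audio hearing aids can choose whether voice calls pick up their voice through the phone's microphone or through the hearing aids' built-in microphones, and they can adjust the volume of ambient sound coming through the hearing aids. Both are user settings; from an app, you can still choose the communication device with the public AudioManager APIs.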

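```kotlin
val audioManager = getSystemService(AudioManager::class.java)

// Devices that can currently be used for voice communication (API 31+);
// LE Audio devices, including hearing aids, typically report TYPE_BLE_HEADSET
val leAudioDevice = audioManager.availableCommunicationDevices
    .firstOrNull { it.type == AudioDeviceInfo.TYPE_BLE_HEADSET }

leAudioDevice?.let {
    // Route call audio to the hearing aids; the phone-vs-hearing-aid microphone
    // choice itself is made by the user in system settings
    audioManager.setCommunicationDevice(it)
}
// Call audioManager.clearCommunicationDevice() when the call ends
```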

Hybrid auto-exposure

A new Android 16 feature: Camera2 now supports a hybrid auto-exposure (AE) mode. Instead of choosing between fully manual and fully automatic exposure, you fix either ISO (sensor sensitivity) or exposure time yourself and let AE control the other. This balance is useful for managing noise, motion blur, and dynamic range in professional shoots.

```kotlin
// Keep AE on, but give ISO priority: the app sets ISO and AE adjusts exposure time
reqBuilder.set(CaptureRequest.CONTROL_AE_MODE, CameraMetadata.CONTROL_AE_MODE_ON)
reqBuilder.set(
    CaptureRequest.CONTROL_AE_PRIORITY_MODE,
    CameraMetadata.CONTROL_AE_PRIORITY_MODE_SENSOR_SENSITIVITY_PRIORITY
)
reqBuilder.set(CaptureRequest.SENSOR_SENSITIVITY, 800) // ISO 800
```

Precise color temperature and tint adjustments

A new Android 16 feature: with COLOR_CORRECTION_MODE_CCT, you can go beyond the built-in AWB modes and set white balance directly as a color temperature (in Kelvin) plus a tint. This is ideal for film production or scenes where colors must match precisely across shots.

```kotlin
// Turn off auto white balance and set white balance by color temperature + tint
reqBuilder.set(CaptureRequest.CONTROL_AWB_MODE, CameraMetadata.CONTROL_AWB_MODE_OFF)
reqBuilder.set(CaptureRequest.COLOR_CORRECTION_MODE, CameraMetadata.COLOR_CORRECTION_MODE_CCT)
reqBuilder.set(CaptureRequest.COLOR_CORRECTION_COLOR_TEMPERATURE, 5500) // Kelvin
reqBuilder.set(CaptureRequest.COLOR_CORRECTION_COLOR_TINT, 10)
```

Night mode indicator

A new Android 16 feature: the EXTENSION_NIGHT_MODE_INDICATOR CaptureResult key tells your app whether the current scene is dark enough that Night mode would help. You can use it to adjust your UI or suggest switching to Night mode.

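```kotlin
// The key holds an Int: EXTENSION_NIGHT_MODE_INDICATOR_ON, _OFF, or _UNKNOWN
val nightMode = result.get(CaptureResult.EXTENSION_NIGHT_MODE_INDICATOR)
if (nightMode == CameraMetadata.EXTENSION_NIGHT_MODE_INDICATOR_ON) {
    overlayView.showNightModeBanner()
}
```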

Motion photo capture intents

A new Android 16 feature: the MediaStore.ACTION_MOTION_PHOTO_CAPTURE and ACTION_MOTION_PHOTO_CAPTURE_SECURE intents ask the camera app to capture a motion photo, a still image with a short video clip stored alongside it. Your app can show it as a regular photo or play the motion part as a short, GIF-like preview.

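```kotlin
// Ask the camera app for a motion photo, written to outputUri (API 36+)
val intent = Intent(MediaStore.ACTION_MOTION_PHOTO_CAPTURE).apply {
    putExtra(MediaStore.EXTRA_OUTPUT, outputUri)
    addFlags(Intent.FLAG_GRANT_WRITE_URI_PERMISSION)
}
// startActivityForResult() is deprecated; an ActivityResultLauncher works as well
startActivityForResult(intent, MOTION_PHOTO_REQUEST)
```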

HEIC_ULTRAHDR support

A new Android 16 feature: the ImageFormat.HEIC_ULTRAHDR format lets the camera produce Ultra HDR photos in HEIC, with gain-map metadata following ISO 21496-1. It combines high dynamic range with HEIC's efficient compression.

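```kotlin
// The output format comes from the target surface, not from a request key.
// Check support first with StreamConfigurationMap.isOutputSupportedFor(ImageFormat.HEIC_ULTRAHDR).
val reader = ImageReader.newInstance(width, height, ImageFormat.HEIC_ULTRAHDR, 2)

// reader.surface must also be one of the capture session's outputs
val captureBuilder = cameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_STILL_CAPTURE)
captureBuilder.addTarget(reader.surface)
captureBuilder.set(CaptureRequest.JPEG_QUALITY, 100.toByte())      // Byte-typed key
captureBuilder.set(CaptureRequest.JPEG_THUMBNAIL_SIZE, Size(0, 0)) // (0, 0) = no thumbnail
```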

RangingManager (802.11az, BLE, UWB)

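A new Android 16 feature: RangingManager (in android.ranging) gives you one API for measuring the distance to nearby devices over UWB, Bluetooth channel sounding, BLE RSSI, or Wi-Fi RTT. On supported hardware with 802.11az, Wi-Fi ranging adds AES-256 encryption and protection against man-in-the-middle attacks. Accuracy depends on the technology: UWB can reach centimeter-level precision, while RSSI-based estimates are much coarser. Typical uses include IoT, smart homes, and asset tracking.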

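```kotlin
val rm = getSystemService(RangingManager::class.java)

// Open a session; callbacks run on the executor you pass in (API 36+)
val session = rm.createRangingSession(executor, object : RangingSession.Callback {
    override fun onOpened() {}
    override fun onOpenFailed(reason: Int) {}
    override fun onStarted(peer: RangingDevice, technology: Int) {}
    override fun onResults(peer: RangingDevice, data: RangingData) {
        // Distance is reported in meters
        data.distance?.let { Log.d("Range", "Distance: ${it.measurement} m") }
    }
    override fun onStopped(peer: RangingDevice, technology: Int) {}
    override fun onClosed(reason: Int) {}
})
// `preference` is a RangingPreference describing your role and technology (UWB, BLE, Wi-Fi RTT)
session?.start(preference)
```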
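To see why BLE RSSI ranging is much coarser than UWB, it helps to look at how distance is usually estimated from signal strength. The sketch below applies the standard log-distance path-loss model; it is not how RangingManager computes distances internally, and the calibration values (the RSSI measured at 1 m and the path-loss exponent, about 2 in free space and higher indoors) are assumptions you would have to measure.

```kotlin
import kotlin.math.pow

/**
 * Log-distance path-loss estimate: d = 10^((rssiAt1m - rssi) / (10 * n)) meters.
 * [rssiAt1mDbm] and [pathLossExponent] are calibration assumptions, not API values.
 */
fun rssiToMeters(
    rssiDbm: Double,
    rssiAt1mDbm: Double = -59.0,
    pathLossExponent: Double = 2.0,
): Double {
    require(pathLossExponent > 0.0) { "path-loss exponent must be positive" }
    return 10.0.pow((rssiAt1mDbm - rssiDbm) / (10.0 * pathLossExponent))
}
```

With the defaults, a reading of -79 dBm maps to 10 m, while a 6 dB swing (easily caused by a body or a reflection) changes the estimate by roughly a factor of two. That sensitivity is why RSSI ranging suits proximity detection, and UWB suits precise distance.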

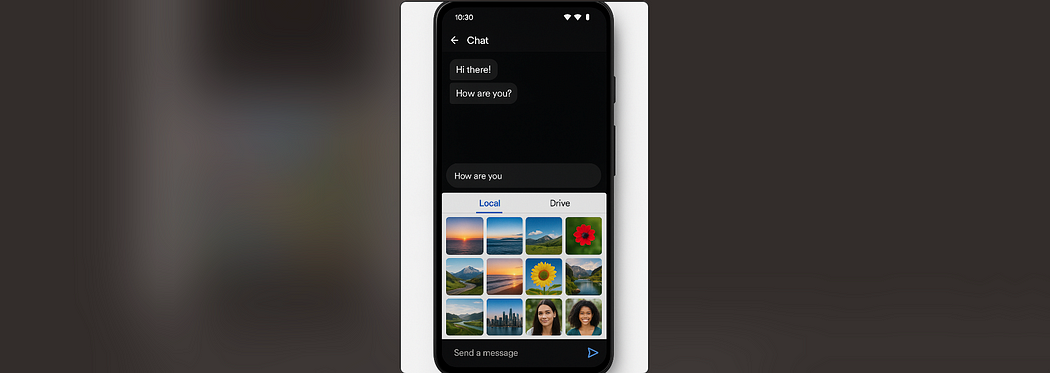

Embedded and Cloud Photo Selector

The photo picker gets two notable updates:

- Embedded picker: you can embed the photo picker directly in your layout instead of launching it full screen.

- Cloud search: cloud media apps can supply search results, so users can search their cloud library and their local media in one place.

```kotlin
// Inside a ComponentActivity or Fragment (AndroidX Activity Result API).
// This opens the standard full-screen picker; embedding it in your own layout
// goes through the Jetpack embedded photo picker library instead.
private val pickImage =
    registerForActivityResult(ActivityResultContracts.PickVisualMedia()) { uri: Uri? ->
        // uri is null when the user closes the picker without choosing anything
        if (uri != null) imageView.setImageURI(uri)
    }

private fun openPicker() {
    // Images only
    pickImage.launch(PickVisualMediaRequest(ActivityResultContracts.PickVisualMedia.ImageOnly))
}
```

Advanced Professional Video (APV)

A new Android 16 feature: support for the Advanced Professional Video (APV) codec. Its APV 422-10 profile offers 10-bit color depth, YUV 4:2:2 chroma sampling, and bitrates up to 2 Gbps, along with features for professional workflows such as multi-view video and auxiliary tracks like alpha channels.

```kotlin
// APV encoders are optional hardware; check MediaCodecList for one before configuring
val format = MediaFormat.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_APV, width, height)
format.setInteger(MediaFormat.KEY_PROFILE, MediaCodecInfo.CodecProfileLevel.APVProfile422_10)
format.setInteger(MediaFormat.KEY_BIT_RATE, 2_000_000_000) // 2 Gbps
```
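The 2 Gbps ceiling is easier to grasp as storage. A quick calculation in plain Kotlin (decimal units, where 1 GB = 10^9 bytes) shows what a given bitrate means per minute of footage:

```kotlin
/** Bytes written per minute of video at [bitsPerSecond] (8 bits per byte). */
fun bytesPerMinute(bitsPerSecond: Long): Long {
    require(bitsPerSecond >= 0) { "bitrate must be non-negative" }
    return bitsPerSecond * 60 / 8
}

/** Same value in decimal gigabytes (10^9 bytes), for display. */
fun gigabytesPerMinute(bitsPerSecond: Long): Double = bytesPerMinute(bitsPerSecond) / 1e9
```

At the 2 Gbps maximum this comes to 15 GB per minute (about 900 GB per hour), which is why such bitrates target professional capture and post-production rather than everyday recording.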

– Resources: https://developer.android.com/about/versions/16/features

Author: Uğur Can Işıldar